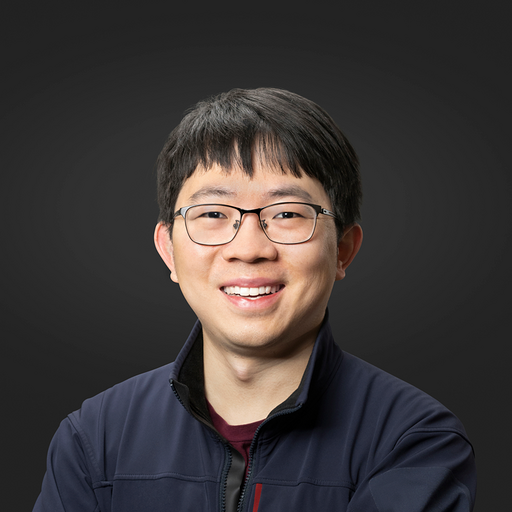

Hao Zhang, Halıcıoğlu Data Science Institute and Department of Computer Science and Engineering, UC San Diego.

Hao Zhang is an Assistant Professor in the Halıcıoğlu Data Science Institute and the Department of Computer Science and Engineering at UC San Diego. Before joining UCSD, Hao was a postdoctoral researcher at UC Berkeley working with Ion Stoica (2021 – 2023). Hao completed his Ph.D. in Computer Science at Carnegie Mellon University with Eric Xing (2014 – 2020). During his Ph.D., Hao took a leave of absence to work at the ML startup Petuum (2016 – 2021).

Hao’s research interests lie at the intersection of machine learning and systems. Hao’s past work includes Vicuna, FastChat, Alpa, vLLM, Poseidon, and Petuum. Hao’s research has been recognized with the Jay Lepreau Best Paper Award at OSDI’21 and an NVIDIA Pioneer Research Award at NeurIPS’17. Parts of Hao’s research have been commercialized at multiple startups, including Petuum and Anyscale.