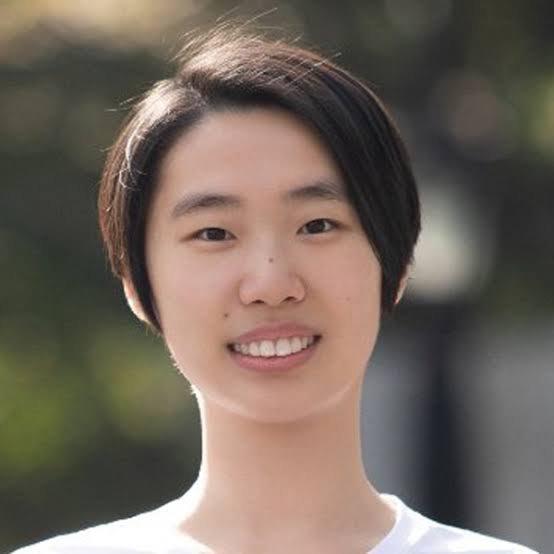

Zhijing Jin

Zhijing Jin (she/her) is an Assistant Professor at the University of Toronto. Her research focuses on Large Language Models and Causal Reasoning, and AI Safety in Multi-Agent LLMs. She has received three Rising Star awards, two Best Paper awards at NeurIPS 2024 Workshops, two PhD Fellowships, and a postdoc fellowship. She has authored over 80 papers, many of which appear at top AI conferences (e.g., ACL, EMNLP, NAACL, NeurIPS, ICLR, AAAI), and her work has been featured in CHIP Magazine, WIRED, and MIT News. She co-organizes many workshops (e.g., several NLP for Positive Impact Workshops at ACL and EMNLP, and the Causal Representation Learning Workshop at NeurIPS 2024), and has led the Tutorial on Causality for LLMs at NeurIPS 2024 and the Tutorial on CausalNLP at EMNLP 2022. To support diversity, she organizes the ACL Year-Round Mentorship. More information can be found on her personal website: zhijing-jin.com